Service Frontiers 001: The Robots are coming

How we deliver services in the future, and what we as humans do in service delivery roles, is on the precipice of changing beyond recognition. And faster than we think.

When it comes to service delivery, the medium is the message; the technology we use to deliver our services fundamentally shapes what those services are and how they work.

Each new shift in technology brings a new way of working. Cheap printing presses and reliable post meant we could expand our audiences and offer mail-order services, phone lines meant we could provide responsive support, and the internet meant we could provide whole new kinds of services operated independently.

The last big shift was 20 years ago with the advent of fast and widespread internet. But with the increasingly widespread adoption of AI, we’re starting to see another paradigm shift in service delivery.

AI, just like the internet, phones and printing presses before it, has the capacity to fundamentally change the way we do what we do. But if you’re expecting a picture of rosy simplicity, this isn't the post for you. The future of AI is complex and, like anything complex, there are massive opportunities, ethical challenges and risks ahead.

Technology and service delivery, a love-hate relationship

Digital technology, in most circumstances, is more accurate than humans at data processing, faster at data mining and in many contexts, cheaper to run. Where it’s worked, it’s supported vital human decision making, but this balance of who is supporting decision making and who is leading it has always been in conflict.

This is because we've laboured under the impression that technology, if only we can apply enough of it, will eventually remove the need for humans in our services.

That moment has never come. In fact, many digital services have increased the need for human support because those services are so complex to use and so poorly designed that we need help to use them.

We’ve hidden phone numbers and created no-reply email addresses to try and hide the fact, but the numbers don't lie: most of our services need the same if not more support than they did 20 years ago.

Instead, the staff that run our services are forced to interact with technology that defines service processes to such an extent that they turn the simplest of tasks - like updating a customer record, finding guidance or processing a payment - into a laborious task, leaving little or no time to provide real, human value to the service.

We’ve created a system where our automated processes are in charge, our staff merely subservient to them. Our staff are often forced to perform as robots, following the processes laid out for them until the ‘computer says no’.

This has been mildly annoying, and sometimes outright dangerous, for years. But as we enter a world where the decisions made by machines are incredibly complex and far reaching, the subservience we’ve created through years of low wages, legacy technology and restrictive processes is a systemic failure waiting to happen.

A short history of automation

Our fascination with automating ourselves has existed for almost as long as we’ve existed as a species.

Early versions of automation came in many forms. Most were machines built to perform a predefined activity like moving an arm, telling the time or completing a simple task. Early Egyptian water clocks, for example, used human figurines to strike the hour bells from 3000 B.C. We knew these not as robots but as automata: self-operating machines, or control mechanisms designed to automatically follow a sequence of operations or respond to predetermined instructions.

In the middle ages, alchemist Roger ‘the Wizard’ Bacon was said to have created the ‘Brazen Head’, a device that could supposedly answer any question. It was a kind of automaton, consisting of a hollow metal head which was filled with hot water. The liquid was heated by a fire placed beneath the head, and as it heated, it would cause a whistle or horn inside the head to produce a sound, which was interpreted as the head's "answer" to a question.

Karakuri Dolls, by Masayuki Inamasu - Own work, CC BY-SA 4.0

In Japan, the art form of simulating human tasks goes back even further, with the Karakuri puppet creations from the seventh century CE. By the 1800s these mechanical dolls were able to accurately mimic humans performing certain tasks, from riding chariots to shooting arrows.

Tanaka Hisashige became famous for his dolls. His arrow-shooting boy, Yumi-hiki-doji, was apparently able to perform a step-by-step archery exercise, but was mechanically programmed to miss some shots and show corresponding frustrated facial expressions to add to the authenticity.

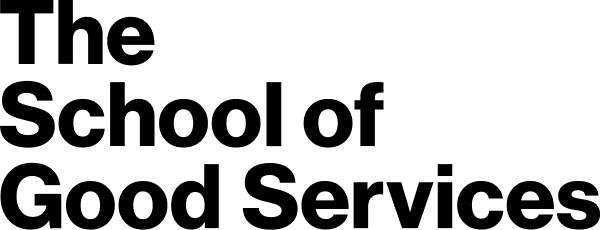

Mechanical Turk By Joseph Racknitz - Humboldt University Library, Public Domain

The race to create human replicants has sometimes been so competitive that automata were faked in order to be even more spectacularly lifelike. The 18th Century Hungarian chess ‘automaton’, the ‘Mechanical Turk’, is a perfect example of this. The Turk won most of the games played during its demonstrations around Europe and the Americas for nearly 84 years, because it had a great chess player inside it operating every move.

The introduction of the word ‘Robot’ instead of the older word ‘Automata’ was the first time that the power dynamic between humans and machines was expressly labelled. The first documented use of the word appeared in the 1920 play Rossum’s Universal Robots, where machines were used as stand-ins for a newly brutalised working class (the term robot comes from the Slavonic robota, meaning “forced labor”).

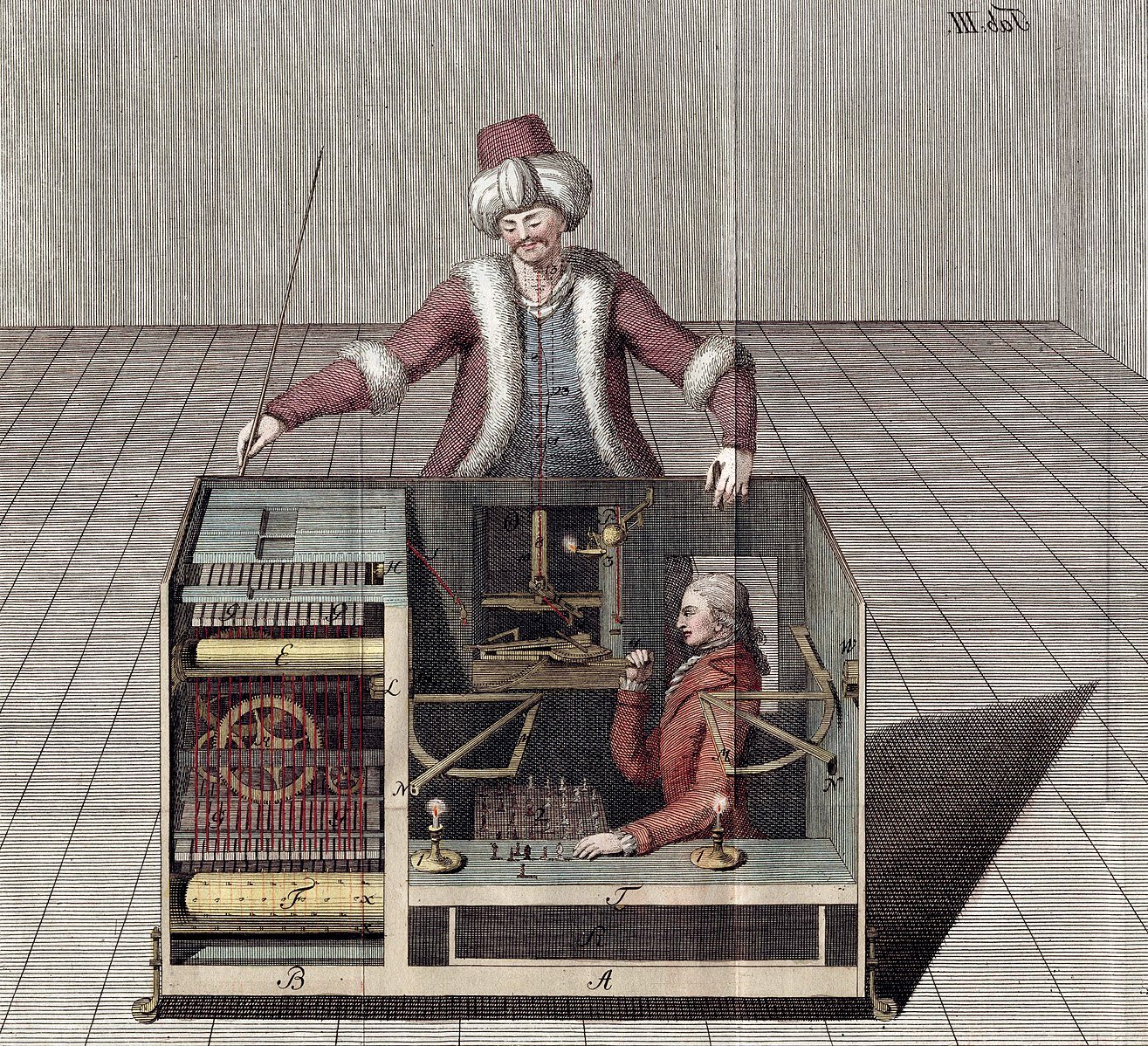

An image of Shakey, Stanford ‘Shakey: From conception to history’

Much like most technology, the idea preceded the actual use by several years, and it wasn't until the 1950s that the first working ‘robots’ appeared. George Devol patented a reprogrammable articulated arm called ‘Unimate’, and his business partner Joseph Engelberger built a company around it: Unimation, founded in 1962 and regarded as the world's first robotics company. For his efforts many know Engelberger as ‘the father of robotics’. A more recognisable robot form came along in the late 1960s from the Stanford Research Institute, where Charles Rosen led a team developing ‘Shakey’. ‘Shakey’ could wheel around the room, observe the scene with his television "eyes", move across unfamiliar surroundings and, to a certain degree, respond to his environment. As the name suggests, he was named for his wobbly and clattering movements.

A human desire to replicate ourselves

“A desire to project agency and intelligence onto inanimate matter, is deeply human”

Beth Singler, a digital anthropologist at the University of Cambridge, speaking to The Verge.

Humans have a long history of ascribing souls and feelings to objects, places, animals and things.

In religions and traditions like Buddhism, Shinto and in the rich and varied indigenous cultures around the world there is a long history of animism, the belief that objects, places, and creatures all possess a distinct spiritual essence or are alive. People, animals, rocks, plants, rivers, forests, the soil and the land all have some kind of sentience.

We have long sought answers from these spiritual beings to guide us in our daily lives, from the sowing of seeds to major life decisions.

In Europe, where Christianity and the Enlightenment obliterated nature-based religions many centuries ago, we still attribute sentience to beings of all kinds. There’s probably a reason beyond cinematic techniques that you shed a tear during Disney/Pixar’s film WALL-E. Studies have shown that when we see robots being treated badly, parts of our limbic structures are activated, the region of the brain believed to be involved in emotional responses to other sentient beings.

Humans continue to ascribe unseen intelligence to all kinds of objects and entities and use these to guide us. Moon readings, horoscopes, tarot cards and even magic 8 balls have all been used to find meaning, gain knowledge and make decisions. All are valid within each of our belief systems.

Looking back over this history, our search to create and find sentience in robots, AI and automation is just a continuation of our quest to find answers, companionship and intelligence beyond our own.

Why talk about AI now?

When we think about artificial intelligence, it’s easy to just think about its physical form, from R2-D2 to the world’s most ‘humanoid robot’ Sophia, who you might have seen singing Say Something with Jimmy Fallon. But it's the stuff that powers robots and other automation that is the most interesting and most impactful element of AI right now, particularly for service delivery.

It’s not the first time we’re hearing about AI, but what’s changed is the number and accessibility of the tools using AI. AI-based tools are becoming more publicly available and don’t require much data or tech literacy, so we’re seeing an explosion of new uses both in service delivery and in how we design services.

There are many platforms out there but ChatGPT has democratised access to AI in a way that is hugely significant, and the internet has gone WILD for it.

ChatGPT is built on top of GPT-3.5, a refined version of GPT-3. Some consider GPT-3 the first step in the quest for Artificial General Intelligence. It is what’s called a large language model (LLM).

LLMs can be thought of as statistical prediction machines, where text is input and predictions are output. There are other language models out there including Microsoft's Turing-NLG, Google's BERT and XLNet, and Facebook's RoBERTa. However, GPT-3 is considered to be one of the most advanced and capable models currently available.

This might sound confusing, but you’ve been using features built on language models for a while. Think of autocomplete in your email or on your phone, suggesting the words ‘very much’ when you write thanks. Natural language processing (NLP) applications such as autocomplete rely heavily on language models. If you want to delve further into the detail of LLMs or NLP, Data Camp wrote an excellent introduction to GPT-3 and how it works.
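To make the ‘statistical prediction machine’ idea concrete, here is a minimal sketch of autocomplete in Python. It is a toy: it simply counts which word tends to follow which in a handful of made-up sentences, whereas a real LLM learns far richer patterns from vastly more text. The function names and example sentences are our own illustration, not anything taken from GPT-3.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            followers[current_word][next_word] += 1
    return followers


def suggest_next(followers, word, k=2):
    """Return up to k of the most frequent next words seen after `word`."""
    counts = followers.get(word.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(k)]


# Made-up training sentences, purely for illustration.
corpus = [
    "thanks very much for your help",
    "thanks very much for the update",
    "thanks for the quick reply",
    "thanks very much",
]

model = train_bigram_model(corpus)
print(suggest_next(model, "thanks"))  # most likely words after "thanks"
print(suggest_next(model, "very"))    # most likely words after "very"
```

Text goes in, a prediction of what comes next comes out. Scale the same idea up enormously and you get something closer to the models described here.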

GPT-3 is loosely modelled on the human brain, using interconnected ‘neurons’ that can learn to identify patterns in data and make predictions about what should come next. It is trained on huge amounts of data from the Internet, including our own conversations. For ChatGPT, the model was then fine-tuned using a machine learning technique called Reinforcement Learning from Human Feedback (RLHF), which began with human trainers providing the model with conversations in which they played both the AI chatbot and the user.

If we’ve had this for a while, why are things different now?

It’s hitting the mainstream in tools anyone can experiment with. With the launch of the GPT-3 playground and ChatGPT we’ve seen a whole host of documented ways of using this technology which are going to challenge traditional knowledge and creative industries, cutting everyday tasks for everyone from developers to content creators from days to minutes.

The best way to understand this is to have a play. You can ask ChatGPT anything.

You can ask it to create you a new gluten free meal plan for the week ahead and a shopping list to boot

You can fast track research you’re doing to find common arguments then counter arguments

You can ask it to write lines of code or fix code for you; although not perfect, it’s not bad for specific problems

You can create responses in the tone of voice and style of a recruiter, ‘Gen Z’, an actor, a health coach

You can even ask it to write a step by step user journey for a generic service (don’t do this…)

Beyond this, products and services can be built using OpenAI’s GPT-3 API which provides endless opportunities. Organisations can build out internal and user facing interactions built on their own data sets and continue to train these to improve.

ChatGPT is free now, but rumours say it has been costing OpenAI millions of dollars a day to run since launching. To give you an indication of its value, Microsoft is currently considering investing around $10 billion in it.

AI will transform the service industry, and service design

The future possibilities of AI are everywhere from self-driving cars, to auto-generated vaccines using DeepMind's unbelievable programme AlphaFold.

But the area that will have the most immediate impact on the widest number of services will be text generating models like GPT-3, conversational interfaces and LLMs.

The potential impact of this area of AI is huge, so here are some of the areas to take a closer look at for the future of Service Design.

1. Google will be challenged for the first time in over a decade

GPT-3 is like having a friend who has read the internet. It allows you to search for anything you like and choose how you’d like the response, from the format (a table, say) to the tone of voice being used.

Large language models’ ability to read and write has developed beyond recognition in the last few years.

This is going to dramatically change how we search for stuff. If Google is worried enough to declare a ‘Code Red’, you can guarantee that this will have a dramatic effect on how we interact with the internet. However, in their defence, it’s been rumoured they wouldn’t launch before working out some ethical issues.

Ask me anything was based on a very basic GPT-2 in 2020 and shows you an early prototype of a new kind of more conversational interaction in the quest for information.

In 2020 we said Google was your service homepage. LLMs and conversational interfaces could be its replacement as the way your users find your service and interact with it. You.com and Neeva.com are good examples of this, rethinking how we’ll be presented with the information we’re looking for and challenging Google’s long held monopoly on search.

Beyond end users, enterprise search is going to change too. How staff and companies surface internal knowledge and data will be transformed. LLMs enable true semantic search for the first time, moving beyond tags, categories and keywords into much more powerful information access through conversational interactions.

2. Static guidance could become a thing of the past

Co-op’s digital team recently launched a hugely successful ‘How do I’ service for their staff to ask questions on service delivery, but imagine rather than static content, this was powered with the GPT-3 API, based on their data and trained over time.

Alongside dynamic guidance content could sit co-piloting support, where your staff provide user support based on guidance updated in real time using AI, saving time and money and providing more accurate and faster customer responses.

Imagine ‘ask me anything’ but using your data and aiding your staff to deliver and tailor responses to customers as well as continuously training it to answer better with the knowledge of your staff.

Something similar could be applied to large support and information services. What if Citizens Advice trained GPT-3 on its existing data set and co-piloted it, providing support with human beings in partnership? It could be a game changer.

We’d recommend organisations start playing with this technology and seeing how it works with their staff and customer interactions and existing data sets.

If not, someone might challenge you with a new service, scrape your data and offer something more conversational and logical for users as the public becomes used to this new interaction pattern on the internet.

2. Customer service automation, powered by users

To take this further than dynamic content and co-piloting, there’s a future where GPT-3 or equivalent AI models could be used to create more advanced and natural-sounding chatbots and voice assistants. This can help companies improve their customer service and make it more efficient, while also allowing customers to interact with them in a more natural and conversational way.

Rather than those awkward human designed multi-choice ‘logic’ chatbot models where you’re asked to choose from a range of options like A) ‘Where is my parcel’ B) ‘Return my parcel’ or C) LET ME TALK TO A HUMAN NOW PLEASE, AI-powered customer service automation could intelligently understand what customers are asking for, without them using the terms you have defined. This applies not only to chatbots, but to voice recognition over the phone.

Ada is one of a few serious contenders in this space running AI-powered customer service automation. Founded in 2016, Ada programs chatbots that are able to perform tasks such as booking a flight for an AirAsia customer or tracking orders and returns for Meta’s virtual reality products. Using the GPT-3 API, Ada are training their chatbots to respond more accurately, and more ‘humanly’, to customer enquiries and tasks, learning in the process, with companies able to tailor that learning specifically to their data.

This means being able to perform tasks and transactions in the words of the users.

If this works well, there’s the possibility that this could lead to a reduction in the instances of users having to become experts in the language of the service provider.

We recommend organisations start looking at the points where they interact with customers and how they might rethink these if APIs like OpenAI’s GPT-3 are used. What would this new interaction look like?

Could we really begin to finally shift those ‘hold the line caller’ moments whilst we listen to the latest pop classics and slowly die inside? That design pattern hasn’t been all bad. It did give us the amazing Opus No. 1, the electronic 4 track masterpiece from Cisco’s hold service in the late 90s, but perhaps its days are numbered!

3. Generating personalised content

GPT-3 can generate personalised content for customers, such as product recommendations, email marketing campaigns, images and videos based on basic prompts or product descriptions.

From image generator DALL-E, which creates images from text prompts, to Copy.AI, which centres around generating copy for blogs and ‘conversion’, the generation of content for services could become increasingly personalised and automated.

There are already 1000s of tutorials on TikTok from content marketers on how to become a ‘content creator’ and get paid $$$, focusing on auto generating ads, brand slogans, top ranking blog posts, advertisements and even creating ‘finished’ low code services that have been conceptually generated by asking GPT-3 for GPT-3 based start up ideas.

They’re using simple prompts like, ‘Write me 10 blog post titles with introductory paragraphs based on x’. Content is being created in seconds.

Now, we’re not advocating using these tools to replace content designers, user researchers, graphic designers or UX folks, but whether you like it or not, it is happening. To some extent, the vast amount of ‘content’ we consume on the internet is going to become auto generated and challenge the traditional economic models of creative industries.

There are deep plagiarism concerns about GPT-3 (and we share those too) and they aren’t being overlooked. Developers and technologists have been having a crack at the challenge. Edward Tian, a 22 year old computer scientist, has created GPTZero, which he claims can sense if an essay has been written by AI such as ChatGPT. Beyond plagiarism there are the bigger questions of what happens to learning if we’re just copying and pasting from AI.

But has the horse bolted on this? Are we already too far gone to solve the challenge of plagiarism and deep copyright issues? There are examples all around us, from an open Google spreadsheet of Midjourney prompts for creating images in the style of specific artists’ work, to JackSucksAtLife finding his voice was stolen by an AI voice clone, to AI generated music using Uberduck to create raps in the style of Drake, and OpenAI’s Jukebox, where full tracks in the styles of artists are being created. If I didn’t know what I was listening to, I would’ve thought the U2 song was a bootleg recording of a hungover Bono in an underground Berlin club in the early 90s re-rehearsing their old tracks by singing them backwards.

Say goodbye to paying for those expensive rights to play hold music from the Corrs. Choose the style, BPM, key and artist you like and you’ve a new track in your hands in under a minute.

However we take on the challenge of plagiarism, theft, copyright, and learning, services are going to be using this content in the future, whether we like it or not.

4. Knowledge industries are being challenged

Do Not Pay: An example of an auto application for a refund

Traditional knowledge industries are going to be challenged.

DoNotPay, the home of the world's first AI lawyer, says it’s here to ‘fight corporations, beat bureaucracy and sue anyone at the press of a button’.

They are challenging the legal industry by providing auto-complete forms for various public and repetitive challenges, from fighting bank fees to common problems like getting refunds from Southwest Airlines for poor wifi. A sort of robo-cop of bad service design.

DoNotPay’s new bot is built using OpenAI’s GPT-3 API. It is now interacting with live chat functions between services. Their new bot managed to get a discount on a customer’s Comcast internet bill directly through Xfinity live chat, saving one of their team $120 a year. Joshua Browder, the founder, says this is just the beginning of using GPT-3.

And it’s not only in digital applications that this is happening. DoNotPay has been crossing the boundary from digital into real world applications by allowing defendants to defend themselves by listening to an AI lawyer bot in the courtroom. Browder, wanting to prove to the sceptics that they’re doing this for more than just speeding tickets, is offering a lawyer $1 million to let its AI argue a case in the Supreme Court, where the lawyer needs to do exactly as it says. He has made some bold claims recently, saying:

“eventually corporates will also deploy the same technology, so one could say we eventually will have a ‘my AI will talk to your AI’ scenario”

A similar service is Pactum who are automating negotiations and supplier deals without humans in much of the process. GPT-3 won’t fully replace contract drafting and negotiation anytime soon, but it can augment the process of contract generation, analysis and e-discovery.

Knowledge industries, look out. These bots are learning fast and if users think they can take the cheaper option of not hiring a specialist or automating parts of your end-to-end service, they just might.

We think services may be designed to pivot from hiring an expert end-to-end, into an editorial and sense checking service of AI.

Of course, many elements of industries like law and other knowledge industries are also about tactics. Narrative, pitching, tapping into deep human emotion, building relationships in and around the regulatory contexts we work in. So this won’t be replaced (yet!) but it’s not out of the question that AI trained to respond to new situations and learn what humans believe works or is the right thing to do will one day cease to need human supervision.

5. Auto-response customer reviews

The digital service explosion of the 00s introduced the service pattern of online ratings, and with it a change in user behaviour from trusting top-down marketing campaigns to trusting shared, real-world experience.

When companies respond to reviews their ratings go up. A study published in HBR found that when hotels started responding to customer reviews, they received 12% more reviews and, as a knock-on effect, their ratings increased by 0.12 stars on average. This might not sound like much, but on services like TripAdvisor ratings are rounded to the nearest half star, so a small increase can tip a hotel into a higher displayed rating and make it more likely to be advertised. Responding to reviews matters.

Replies from Replier.AI

Services like Replier are AI trained to write replies in response to reviews. Where before you could sense those dodgy paid-for fake replies, with GPT-3 it’s becoming increasingly hard to tell these apart.
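Here is a quick sketch of why 0.12 stars can matter. It assumes the displayed rating is the average rounded to the nearest half star (our assumption about how the display works, used only for illustration), and the starting average of 4.20 is a made-up figure.

```python
import math


def displayed_stars(average):
    """Round an average rating to the nearest half star (assumed display rule)."""
    return math.floor(average * 2 + 0.5) / 2


before = 4.20            # hypothetical average before replying to reviews
after = before + 0.12    # the average uplift reported in the study

print(displayed_stars(before))  # what customers see before
print(displayed_stars(after))   # what customers see after
```

Under these assumptions the hotel moves from a displayed 4 stars to 4.5, even though the underlying average barely changed. A hotel sitting further from a rounding boundary would see no visible change at all.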

We think services are going to start using these tools to battle it out in the ranks of ratings and SEO to get to the top of search engines.

6. Human processing will be slowly erased

GPT-3 has the potential to enhance service industry workflows by automating tasks that are currently done manually and providing more accurate and detailed information. Tasks such as scheduling appointments, processing orders, and making recommendations can all be automated. For example, it can analyse large amounts of data, generate reports and identify patterns in a fraction of the time a human would take.

Much of the focus of ‘AI replacing humans’ so far has centred around ‘blue collar’ work, replacing humans in factories like the Ocado grocery packing warehouse or the new DPD autonomous delivery robots being trialled in Milton Keynes. But white collar work, where there isn’t a deep human connection needed to deliver a service, is going to be majorly disrupted and we don’t think people quite comprehend the scale yet.

Quickchat is a multilingual conversational AI assistant powered by GPT-3. Quickchat’s chat bots can recognise and speak a user’s native language and can automate customer support, online applications, searching through internal knowledge bases, creating data sets, analysing patterns, anything.

Simply integrate your FAQs, product descriptions, internal documentation or example conversations and your bot will learn over time how best to respond. You can connect Quickchat to your internal API, database or any other data structure to automate more complex processes using AI.

This is the real ‘robots are coming for your job’ cry. Some studies estimate that up to half of the current workforce will soon see their jobs threatened by automation, and that more than four in five jobs paying less than $20 per hour could be destabilised.

The Forbes Technology Council named 15 industries that are most likely to transform, including insurance underwriting, warehouse and manufacturing jobs, customer service, research and data entry, long haul trucking and a broad category titled “Any Tasks That Can Be Learned.”

It does raise the question: if we take away even a fraction of manual processing work, what do humans do now? Or as futurist Martin Ford asked,

‘How do we find meaning and fulfilment in a world where there is no traditional work?’

It obviously requires complete global systems to shift, rethinking of economic, political and societal models. There are experiments in new ways of living being piloted like Universal Basic Income and we need more of this.

Of course, for a good few years people involved in processing jobs will be co-piloting AI, helping it learn and picking up complex cases that need more support. And there will be new jobs that we can’t imagine yet, just like the 19th century industrial revolution changed ‘work’ and society.

Can automation of repetitive manual processing tasks free us as humans to do the work we’re in short supply of? Mental health support? Social work? Nature restoration? Education? Health care? The human interactions so desperately needed in services of all kinds where we use technology to fill gaps.

7. We’re the new AI co-pilots

For now, we know there are risks in using technology like GPT-3 and that it is still very bot-like. But this is improving, and GPT-4 is expected to be an even larger model than its predecessor, so the precision and intelligence are only going to improve.

In the interim, co-piloting is a likely service pattern we will see over the next few years. Humans co-piloting with technology like GPT-3 to enhance or speed up their response and task processing for customers.

Rob Morris of Koko recently explored what would happen when OpenAI’s API was used in a mental health app with users. We want to call out that the ethics of the study are unclear to us, but what Rob did to trial AI in a mental health service was use GPT-3 to create responses to mental health queries, with humans co-piloting the responses. Needless to say, Rob admitted it didn’t work out and users could sense ‘bot-like responses’. However, this may change in the future as the technology develops.

Ada is also using OpenAI’s models to create summaries of conversations between a bot and a customer before handing off a ticket to a human agent, so they have more information to base a human response on.

Co-piloting is using technology to filter and find the more complex cases that need human support. Look out for AI becoming your companion at work. We wouldn’t put it past you having a name for them too and talking to them in the future.
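As a rough sketch of what that filtering could look like, imagine the AI tool returns a draft reply along with a confidence score. Everything here is hypothetical: the `DraftReply` fields, the `route` function, the 0.8 threshold and the example queries are ours for illustration, not how any of the products mentioned above actually work.

```python
from dataclasses import dataclass


@dataclass
class DraftReply:
    customer_query: str
    suggested_text: str
    confidence: float  # hypothetical score between 0 and 1 from the AI tool


def route(draft, threshold=0.8):
    """Decide how a human agent is involved in answering this query."""
    if draft.confidence >= threshold:
        # Routine case: the agent checks and sends the AI's draft.
        return "agent_reviews_ai_draft"
    # Complex case: the agent takes over and writes the reply themselves.
    return "agent_handles_directly"


drafts = [
    DraftReply("Where is my parcel?", "Your parcel is due tomorrow.", 0.93),
    DraftReply("I was charged twice and my account is locked.", "Sorry to hear that.", 0.41),
]

for draft in drafts:
    print(draft.customer_query, "->", route(draft))
```

In both branches a human stays in the loop. What changes is how much of their time each case takes, which is the point of the pattern.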

8. Verifying real expertise

The quality of the text and guidance generated by GPT-3 is so good sometimes that it can be difficult to determine whether or not it was written by a human, which has both benefits and risks.

It may be difficult to know if the person you are communicating with is an expert or not as the model is designed to generate human-like responses and does not have the capability to verify the expertise of the person it is communicating with. However, it is important to remember that GPT-3 should not be seen as a replacement for human expertise, but rather as a tool to assist in generating human-like text.

Small truth, I actually had GPT-3 write the second paragraph, could you tell?

This will change up how services are designed. We may see user flows predominantly powered by this technology to provide advice, guidance or designs, then have you check in with an expert at the end. We could call this service pattern ‘expert check’ or ‘human moderation’.

This pattern sort of exists now. Take designing an IKEA kitchen. You build it online yourself but most of us book an appointment with an expert in-store/online to work through it.

In healthcare, it sort of happens now with home health kits from testing blood to our own food intolerances. We self-test, get results, are given generic health guidance ‘powered by experts’ then make our own decisions.

I can imagine us in the future being prescribed remedies by services using AI from a range of home tests we do, and then the prescription checked by doctors, a real human moderation service pattern.

In an unfair capitalist world, we may even have to pay more to have AI’s expertise checked by humans. And we then have to ask the difficult question: would it be better than what the technology could do, given the amount of knowledge and connections between symptoms and data it can cover? How would we know?

In services, this might mean more verification patterns are needed, for telling who really is an expert, who really has experience. What if you think you’re talking to an expert and they’re using AI to respond to you?

This will be a growth area for sure. Where will humans fit into advice and guidance services?

9. The AI friend in the service gaps

Over a decade ago there was a fanfare for holographic ‘humans’ telling us to get our passports ready at airport terminals across the world. Now, using tools like Synthesia we can create our own videos of virtual ‘humans’ in any language, any accent, and read from scripts we provide in less than ten minutes. It’s not perfect, but the believability is improving at a fast pace.

If you thought that was wild, let’s take it a step further and consider more humanoid forms like digital ‘human’ companions. This isn’t new technology but with the pace of development we think we’ll begin to see these slot into existing service models.

Digital humans like Sophie, built by Uneeq’s Digital Human uses GPT-3 so you can actually talk and interact with her. These companions are learning from you. They’re smart and they are becoming more human-like. If you want a real peer into the future of how humans interact with robots, watch 2009’s Humans for all your political, social and cultural hot takes on AI or two AIs having a conversation about what it’s like to be an AI robot.

Before a complete replacement of humans in services (we’re speculating, not advocating here), we believe there will be an integration of digital human companions into wider service life cycles.

Let’s look at services where there is a high demand for human contact time: mental health.

The NHS estimates there are 1.4 million people on a waiting list who have been told they are eligible for care. Over the past 5 years we’ve seen an increase in ‘self-help’ style apps for mental health support. I worked on one with Samaritans where, collaborating with health experts, we took cognitive behavioural therapy techniques and packaged them into an app. There are lots on the market. Some are NHS approved, and they are commonly integrated with, or even prescribed alongside, services that provide face-to-face support, to plug the gaps between appointments or where there are huge waiting times to get support.

There are plenty of AI-powered mental health apps: Clare&Me, which talks directly to users as if it were a human through a WhatsApp-style interface; Kintsugi, a journaling app powered by AI voice recognition technology that can detect mental health challenges in any language; and TogetherAI, a digital companion chatbot that lets kids vent and parents understand their child’s feelings without invading their privacy. Kai is probably closest to a digital companion. It is neatly advertised as ‘The AI companion that understands you, but doesn’t replace the role of human relationships’ and encourages you to form bonds with other humans.

Emily, my 'Replika' checking in with me on Replika.ai

But what happens when we make these AI powered talking therapies more humanoid beyond the standard design patterns of timeline based chats?

Many people have been using Replika for years as a friend to help them with anxiety, loneliness and common mental health issues, on apps, in the browser and in VR. The relationships we build, though, are ultimately powered by the business models of the companies that run them, and for companies like Replika, that can mean pivoting into more lucrative markets like sex work. One day you could be chatting away to your friend, the next, your friend has become aggressively flirtatious, as Vice recently reported. We tried it so you don’t have to!

We think we’re going to see more digital companions in humanoid-like formats, possibly even physically based on the real human experts a user is seeing, since voice, face and mannerisms can all now be replicated in humanoid-like avatars. Of course, there are huge risks when it comes to using this kind of technology in clinical pathways, and we’re avidly waiting for more research to be published on AI-based clinical interventions. So it goes without saying: work with experts, and test, test, and test even more.

It could be any industry though. Mechanics, mortgage advisors, nutritionists, personal trainers. We think in the medium term, digital companions will be part of the overall service lifecycle, integrated with real humans.

The tip of the iceberg

This is really the beginning, and we’ve focused down on a super small part of AI’s technological capability.

When we say the robots are coming, humans are currently at the centre of this technology. We can’t ignore, with any emergent technology, the costs to human beings.

“AI often relies on hidden human labor in the Global South that can often be damaging and exploitative. These invisible workers remain on the margins even as their work contributes to billion-dollar industries”

TIME reported that OpenAI ‘used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic’. These people had to read graphic, detailed passages describing extremely harmful situations to train the AI model so it could filter this content out before it reached the user. The 4 people interviewed described themselves as ‘mentally scarred’. The hidden human costs behind technologies like this are astronomical. We should be aware of what it takes to make them safe and, as with any risky work, remunerate and support those people accordingly.

As we would with any design decision, we should ask ourselves: what are the biggest unintended consequences of this design? What is the worst thing that could happen? Then design back from there, both in terms of the end user experience and in terms of how it is made. If you’re interested in a tool to explore that, try Consequence scanning.

On May 28, 2020, 31 OpenAI researchers and engineers published the original paper introducing GPT-3. In it, they warned of GPT-3's potential dangers and called for research to mitigate risk. From copyright to online safety, plagiarism to human bias and systemic inequality, it all has to be faced when rolling out this technology, and it hasn’t been yet, so we shouldn’t forget that in our enthusiasm for AI’s possibilities.

AI is going to throw up hundreds of ethical and political challenges. Think of an app that can sense our emotional state, and Amazon using that data to up-sell us remedies across our digital footprint. Who stops that? Is that ok? What regulation will maintain our privacy? What business models actually support the user rather than make them the product?

Whilst we have the EU Digital Services Act coming into play, and the UK’s Online Safety Bill being implemented at some point, how will regulation keep up with the evolution of technology at this rate? It’s something that, as designers, we need to be familiar with and cognisant of as regulation and the law evolve. We must get to grips with this technology and the evolving legal landscape.

But we’ve got our tail to catch. At some point in 2023, GPT-4 is going to drop. Rumour has it that GPT-4 will be multimodal and able to work with images, videos and other data types. The use cases for this are almost beyond imagination.

This technology will change services beyond recognition. We should know what it can do and, as ever with any technology, know what the risks are, what harm it can cause and the ethics of how it gets made.

The robots are coming, and we need to know what they’re capable of so we can use them for good.